Background & Collaboration

One key insight from lockdown was that culture needs resilience. In 2021, The Safe Street art festival was actively seeking mixed reality artists to get involved, recognising the high risk of cancellation if physical spaces were locked down again. This is exactly why and how Dave and I became involved in a street art festival - our mixed reality work offered a solution that could continue even if traditional festival spaces became inaccessible. We must develop ways to transmit culture that aren't dependent on traditional galleries or physical spaces.

Project Approach

Dave Stitch and I begin projects by defining clear aims before exploring long-term ambitions. With three locations involved - I'm based in the Midlands, Dave is down south, and the festival is in Olomouc, Czech Republic - we started by exploring Olomouc through Street View and VR.

We've now completed several Extended Reality (XR) projects together, including Cubing the Sphere at VRHAM (Hamburg festival) earlier this year, which provided valuable learning experiences. For the past year, our main concept has been composing audiovisual work primarily for Extended Reality experiences.

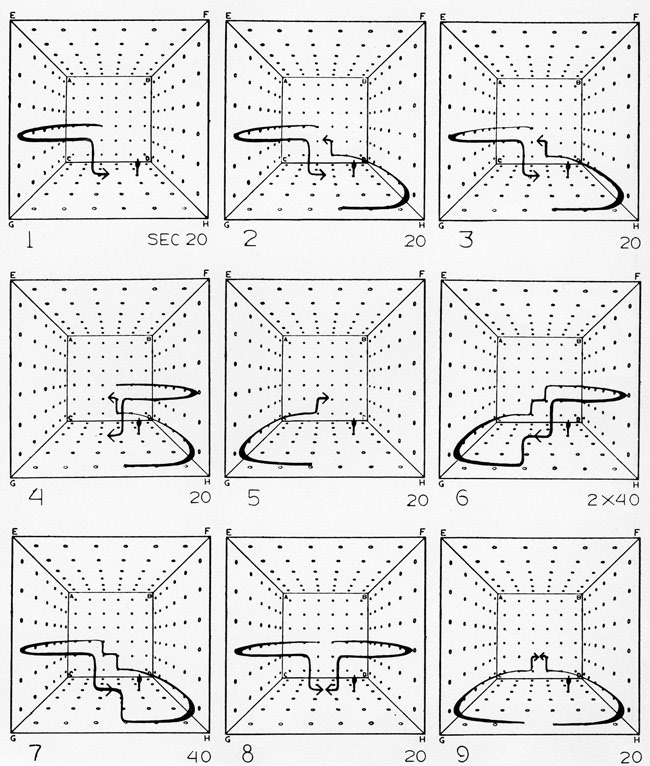

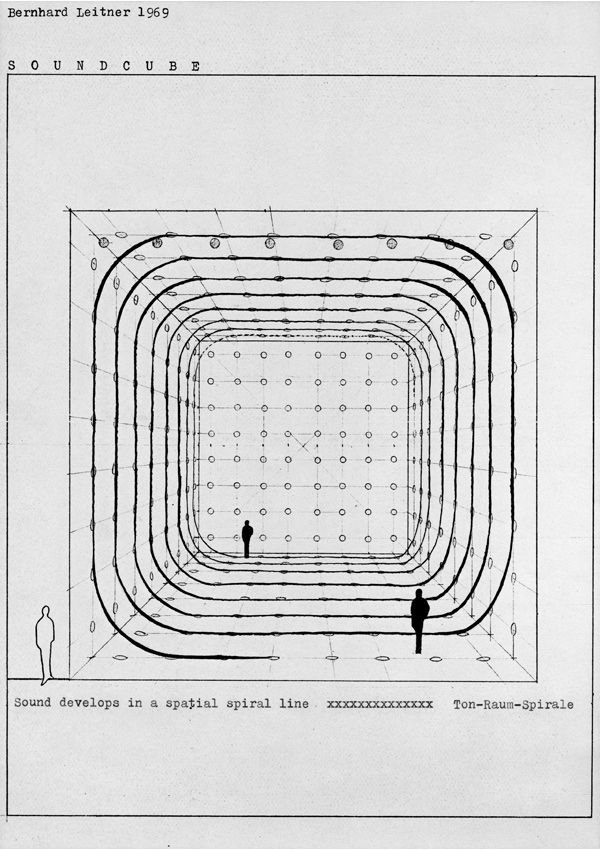

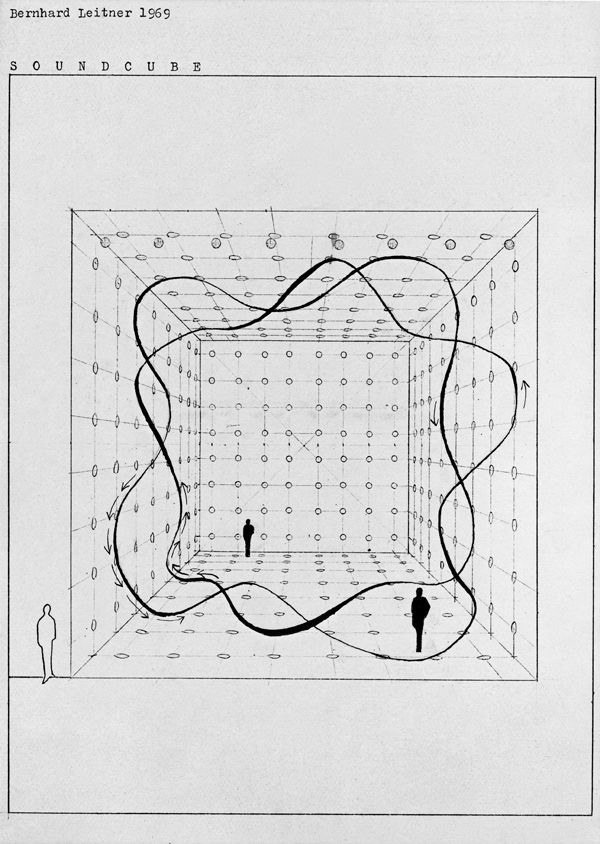

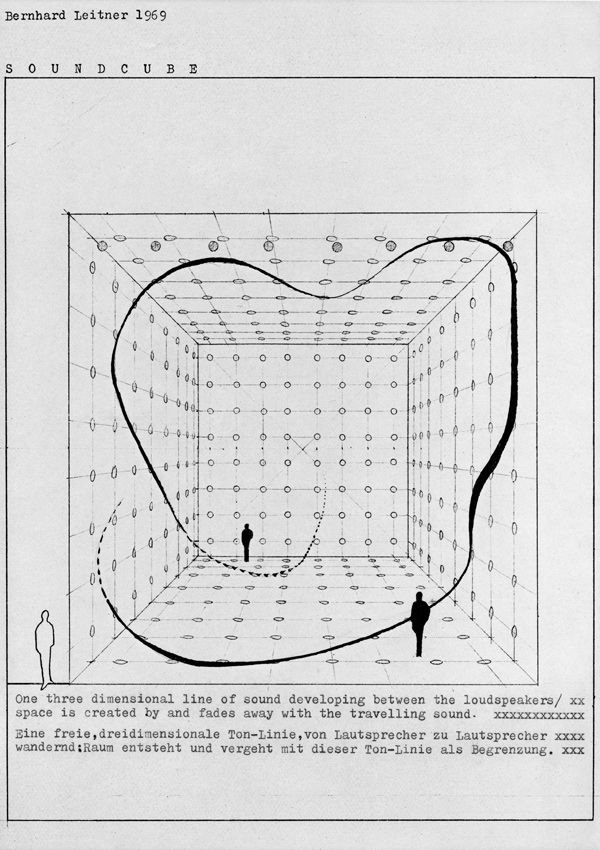

Cubing the Sphere, VRHAM 2021

Inspired partly by Bernhard Leitner's Soundcube, we explore how sound can be warped around 3D space. With modern technology, it's remarkable that his work can now be built in VR quickly using tools like Spoke/Hubs.

We chose Spoke/Hubs as our platform for these VR concepts. While reasonably simple, it offers all the spatial sound options we need. The visual limitations actually force a focus on audio and push us towards creative visual solutions.

For DENISOVA, we wanted to develop our concept by increasing from the three audiovisual stems used at VRHAM to 4-5 stems. Though we haven't yet managed to record compositions in 8D, we're making incremental improvements.

The concept remains consistent: audiovisual stems are positioned relative to a central anchor point, each with its own spatial audio configuration. Within Hubs, we set a steeper audio drop-off while increasing the volume of every stem, so each stem fades quickly with distance but stays clear close up. This allows audiences to appreciate the complete composition from a central position or explore individual stems for focused listening.
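To illustrate the trade-off between drop-off and volume, here is a minimal Python sketch. It assumes an inverse-distance model of the kind the Web Audio API defines for its panner node. The stem positions, the rolloff value and the volume value are all hypothetical and are not taken from the actual DENISOVA scene.

```python
import math

def inverse_gain(distance, ref_distance=1.0, rolloff=1.0):
    """Inverse distance model (same shape as the Web Audio API 'inverse' model)."""
    d = max(distance, ref_distance)  # no extra boost inside the reference distance
    return ref_distance / (ref_distance + rolloff * (d - ref_distance))

# Hypothetical layout: three stems placed around a central anchor at the origin (metres).
STEMS = {
    "kick": (4.0, 0.0),
    "snare": (-4.0, 0.0),
    "hats": (0.0, 4.0),
}

def stem_gains(listener, rolloff, volume=1.0):
    """Return the gain of every stem as heard from the listener's (x, y) position."""
    gains = {}
    for name, (x, y) in STEMS.items():
        d = math.hypot(x - listener[0], y - listener[1])
        gains[name] = volume * inverse_gain(d, rolloff=rolloff)
    return gains

# Steeper drop-off (rolloff=2.0) combined with a raised volume (3.0), both assumed values.
centre = stem_gains((0.0, 0.0), rolloff=2.0, volume=3.0)
near_kick = stem_gains((3.5, 0.0), rolloff=2.0, volume=3.0)
```

With these assumed numbers, a listener at the anchor hears all three stems at the same level, while a listener standing next to the kick hears it far louder than the other two.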

As the public moves through this virtual space - in VR or AR - they become part of the composition, controlling output through movement alone.

Audio Process

Account from Dave Stitch on the composition approach:

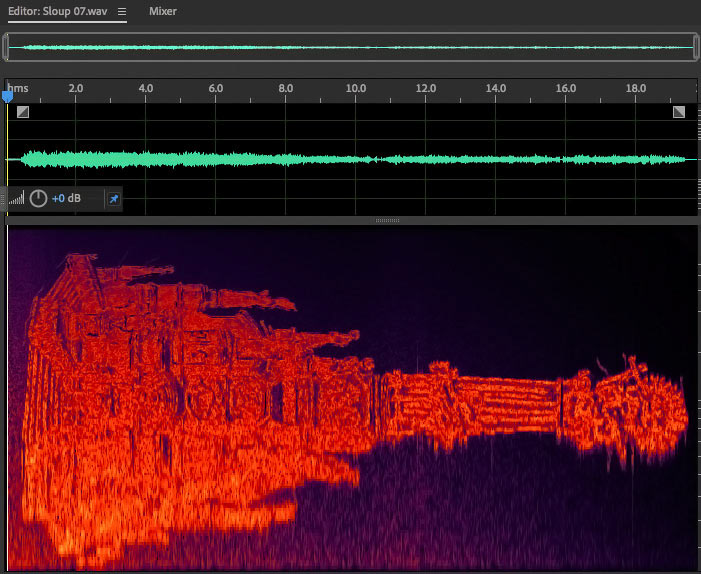

After exploring Olomouc digitally, I researched the city, known for its ornate fountains and monuments. The gothic 'Sloup Nejsvětější Trojice' (Column of the Holy Trinity) stood out - a large monument built to celebrate the plague's end, striking a dark, fractal-like pose in the town square.

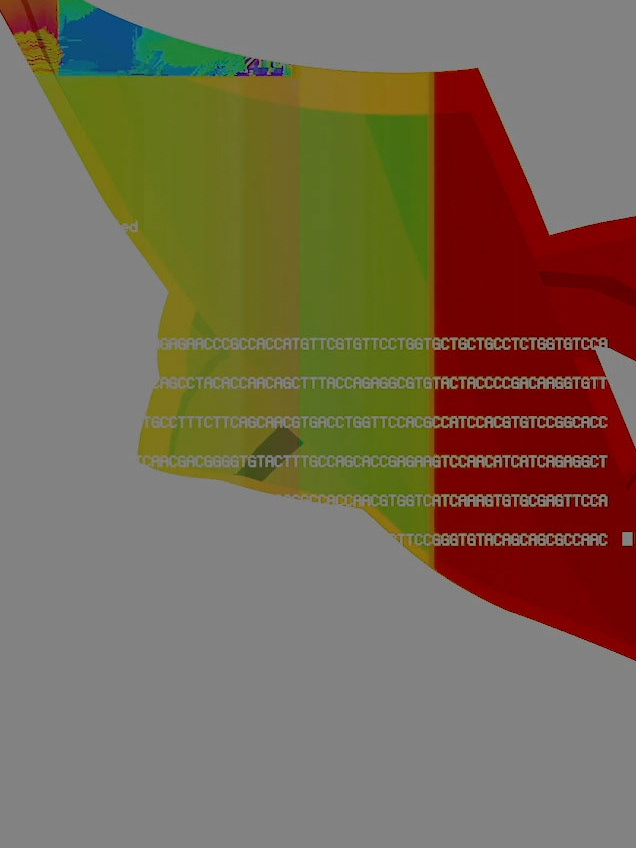

I captured this image and converted it into a spectrogram using various software. A spectrogram visualises the frequency content of a sound as an image: time runs along one axis, frequency along the other, and colour represents amplitude. Reading the image of the Holy Trinity Column as a spectrogram let me transform it into sound.
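The general idea of turning an image into sound can be sketched in a few lines of Python. This is not the software used on the project, only a minimal additive-synthesis illustration. It assumes a tiny greyscale grid in which each row is a frequency band, each column is a slice of time, and brightness (0.0 to 1.0) is amplitude. The sample rate, frequency range and pixel values are hypothetical.

```python
import math

SAMPLE_RATE = 8000  # Hz, kept low so the sketch runs quickly

def image_to_samples(pixels, column_seconds=0.05, f_low=200.0, f_high=3000.0):
    """Read a greyscale grid as a spectrogram and synthesise it with sine waves.

    pixels: list of rows (top row first), each value between 0.0 and 1.0.
    The top row maps to f_high and the bottom row to f_low, spaced linearly.
    """
    rows = len(pixels)
    cols = len(pixels[0])
    span = max(rows - 1, 1)  # avoid dividing by zero for a single-row image
    freqs = [f_high - (f_high - f_low) * r / span for r in range(rows)]
    per_column = int(SAMPLE_RATE * column_seconds)
    samples = []
    for c in range(cols):
        for n in range(per_column):
            t = (c * per_column + n) / SAMPLE_RATE
            value = sum(
                pixels[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(rows)
            )
            samples.append(value / rows)  # normalise so output stays within -1..1
    return samples

# Hypothetical 3x4 "image": the last column is black, so it produces silence.
image = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.5, 0.0],
    [0.2, 0.2, 0.5, 0.0],
]
audio = image_to_samples(image)
```

The resulting list of floats could then be scaled to 16-bit integers and written to a file with Python's standard `wave` module.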

The Sloup Nejsvětější Trojice spectrogram

The Sloup Nejsvětější Trojice monument in Olomouc

Based on the Holy Trinity monument, I considered my own (less) holy trinity:

Kick. Snare. Hat

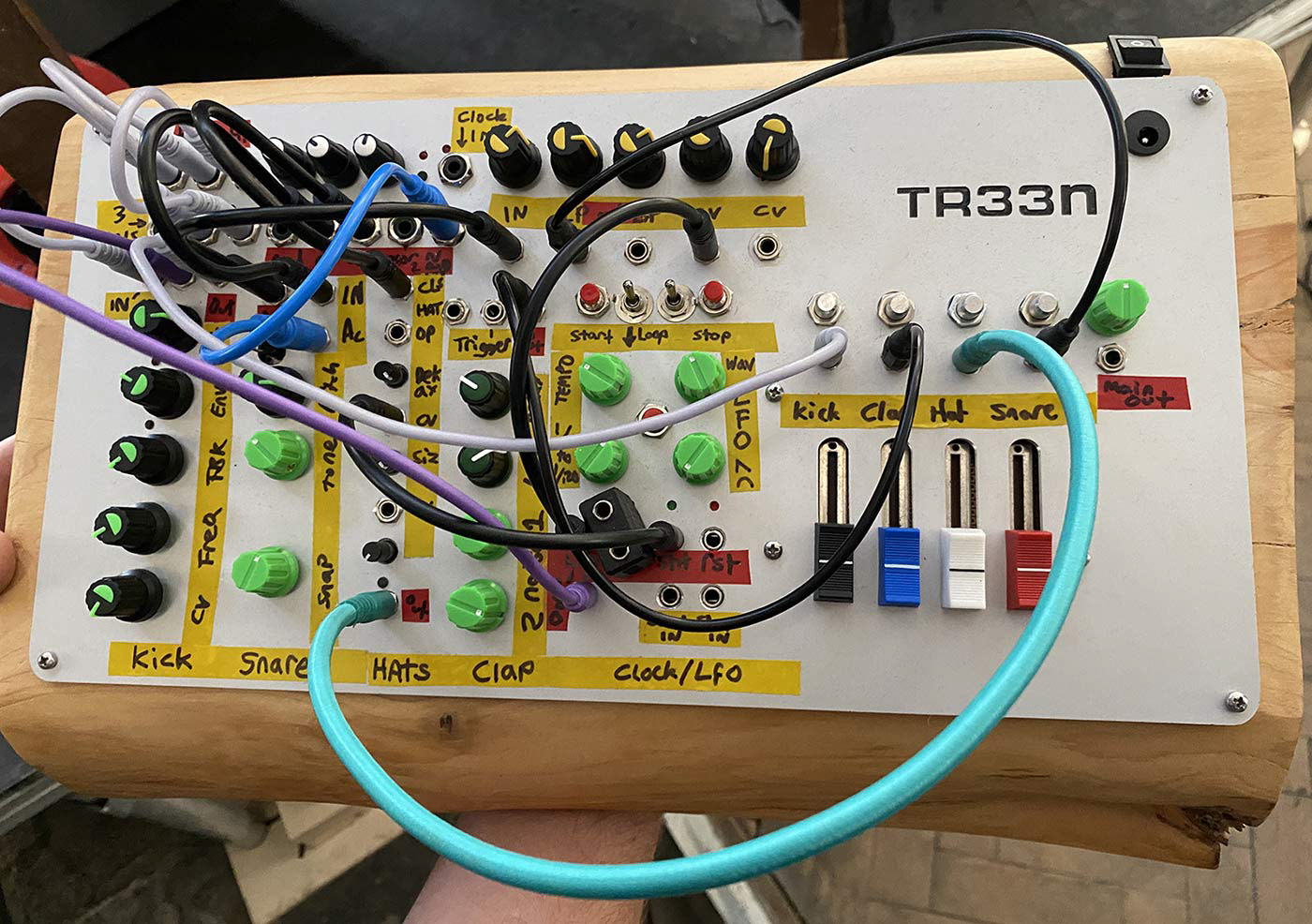

The holy trinity of funk drumming! This gave me an excuse to use my freshly made custom drum machine - the TR-33N.

Learning from our previous 360-degree stem experiments for Cubing the Sphere, I knew stem synchronization couldn't be perfectly accurate, so I used the drum machine to compose arhythmic drumbeats similar to experimental jazz. The listener becomes the space between notes.

I recorded three stems: Kick, Snare, and Hats. To maintain arhythmic timing (challenging on a drum machine), I set a 'Cronograf' clock timer to the stop position and triggered beats manually, creating loops at 124 bpm that sit outside the grid.
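For a sense of what "outside the grid" means in numbers, the following Python sketch works out the grid at 124 bpm and measures how far a set of hits lands from it. The 4/4 bar, the sixteenth-note grid and the hit times are assumptions for illustration, not measurements from the recordings.

```python
BPM = 124
BEAT_SECONDS = 60.0 / BPM         # one quarter note, roughly 0.484 s
STEP_SECONDS = BEAT_SECONDS / 4   # one sixteenth-note grid step, roughly 0.121 s
BAR_SECONDS = BEAT_SECONDS * 4    # one bar, assuming 4/4, roughly 1.935 s

def offsets_from_grid(hit_times):
    """Return (nearest_grid_step, offset_in_ms) for each hit time in seconds."""
    result = []
    for t in hit_times:
        step = round(t / STEP_SECONDS)
        offset_ms = (t - step * STEP_SECONDS) * 1000.0
        result.append((step, offset_ms))
    return result

# Hypothetical manually triggered hits, in seconds from the loop start.
manual_hits = [0.03, 0.51, 1.02, 1.47]
for step, offset_ms in offsets_from_grid(manual_hits):
    print(f"nearest step {step:2d}, offset {offset_ms:+.1f} ms")
```

A sequenced pattern would show offsets of zero. Manually triggered hits show a spread of positive and negative offsets, which is what gives the loops their loose, arhythmic feel.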

Testing the new TR-33N drum machine on DENISOVA

The TR-33N's modular nature allowed me to trigger an LFO simultaneously, affecting various sound elements' control voltage. The results were satisfyingly un-drum-like.

After recording, I contemplated augmented reality's nonlinear nature - how we can create work visible in the future despite not existing as matter. This led me to consider differences between our digital 3D work and traditional sculptures.

The space we operate in exists almost outside time - artwork must be summoned to be witnessed. I wondered what showing our work to Olomouc's people during the Holy Trinity Column's construction period would be like.

With this in mind, I set about writing music of the future to be experienced by people of the past. What would Olomouc's medieval forefathers have thought of modern technology and Extended Reality?

Visual Process

I wanted visuals to truly impact audiences, allowing them to walk into the composition and feel immersed in the artwork. As with all our projects, audio informs and drives animations - this time, I wanted people to get inside these animations and see the sounds.

The theme of impressing Olomouc's medieval forefathers was important - I wanted a futuristic feel while retaining traditional ideals. For the street art festival context, I also sought shapes that would complement graffiti writing.

After virtually visiting the Holy Trinity Column in Olomouc, I realized I could use VR within the design process itself. For this project, VR became the starting point.

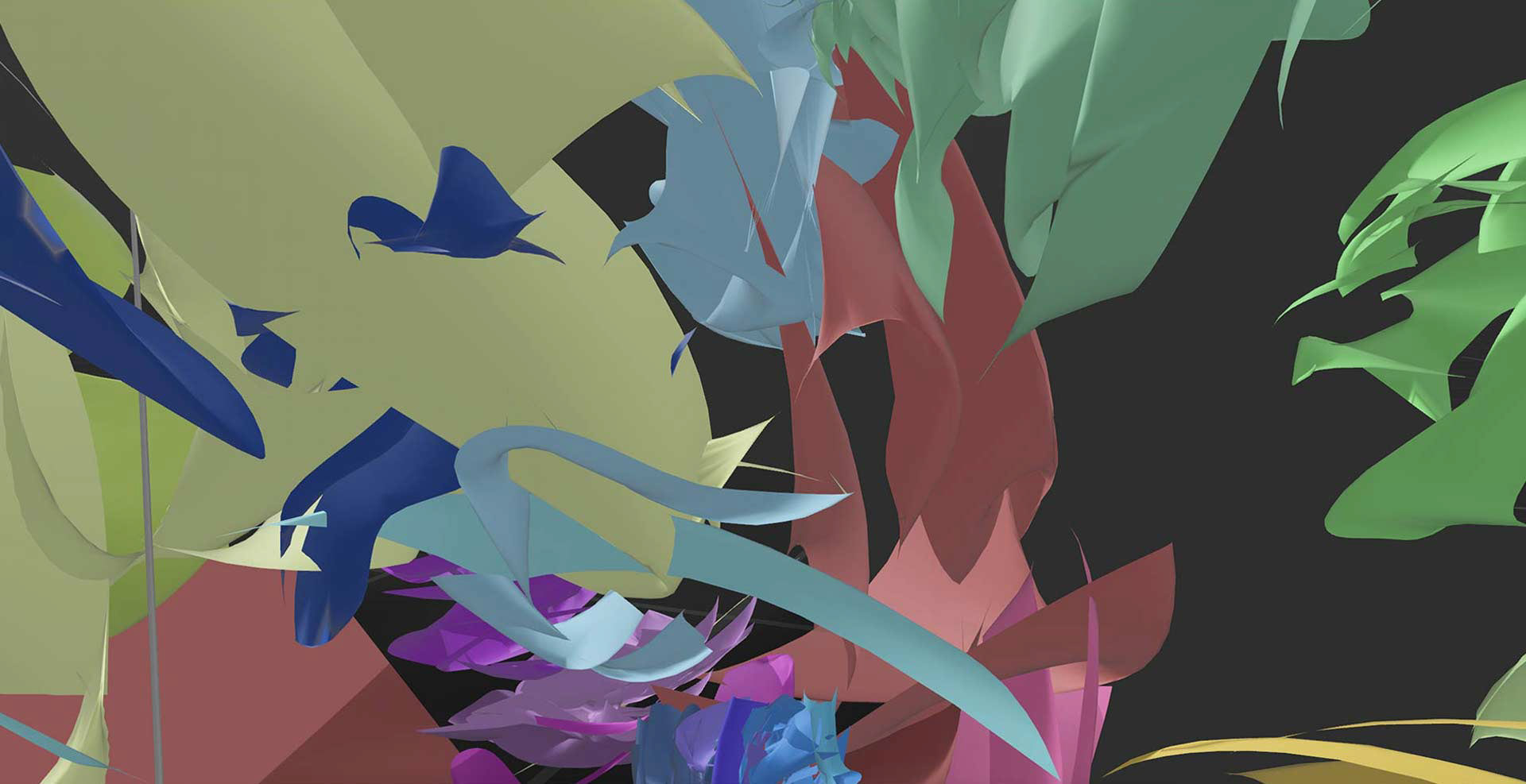

While I've struggled with Tilt Brush since Google discontinued it (mainly due to limited import/export features), Gravity Sketch (a 3D design platform typically for product designers) allowed me to create spontaneous results fitting our aesthetic.

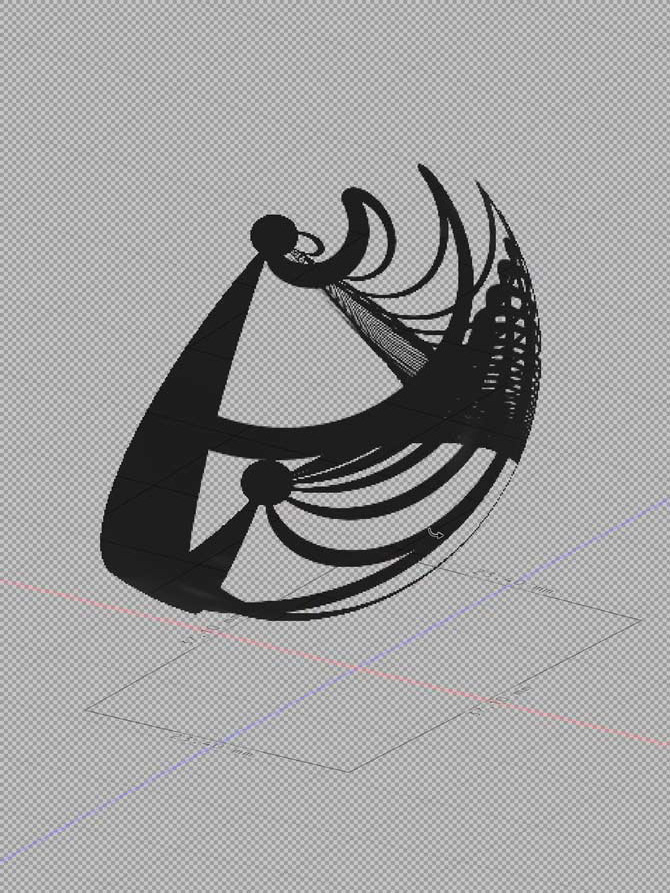

Early outputs from Gravity Sketch

VR Painting Process

Painting in VR proved incredibly liberating. I developed a defined process:

1. Load each audio stem on loop

2. Enter Gravity Sketch in virtual reality

3. Paint in 3D while hearing sound

4. Change colour when sound goes quiet

5. Continue this cycle

This creates quick, spontaneous, intuitive, and colourful results - usually 4-5 seconds of audio painting before colour changes.

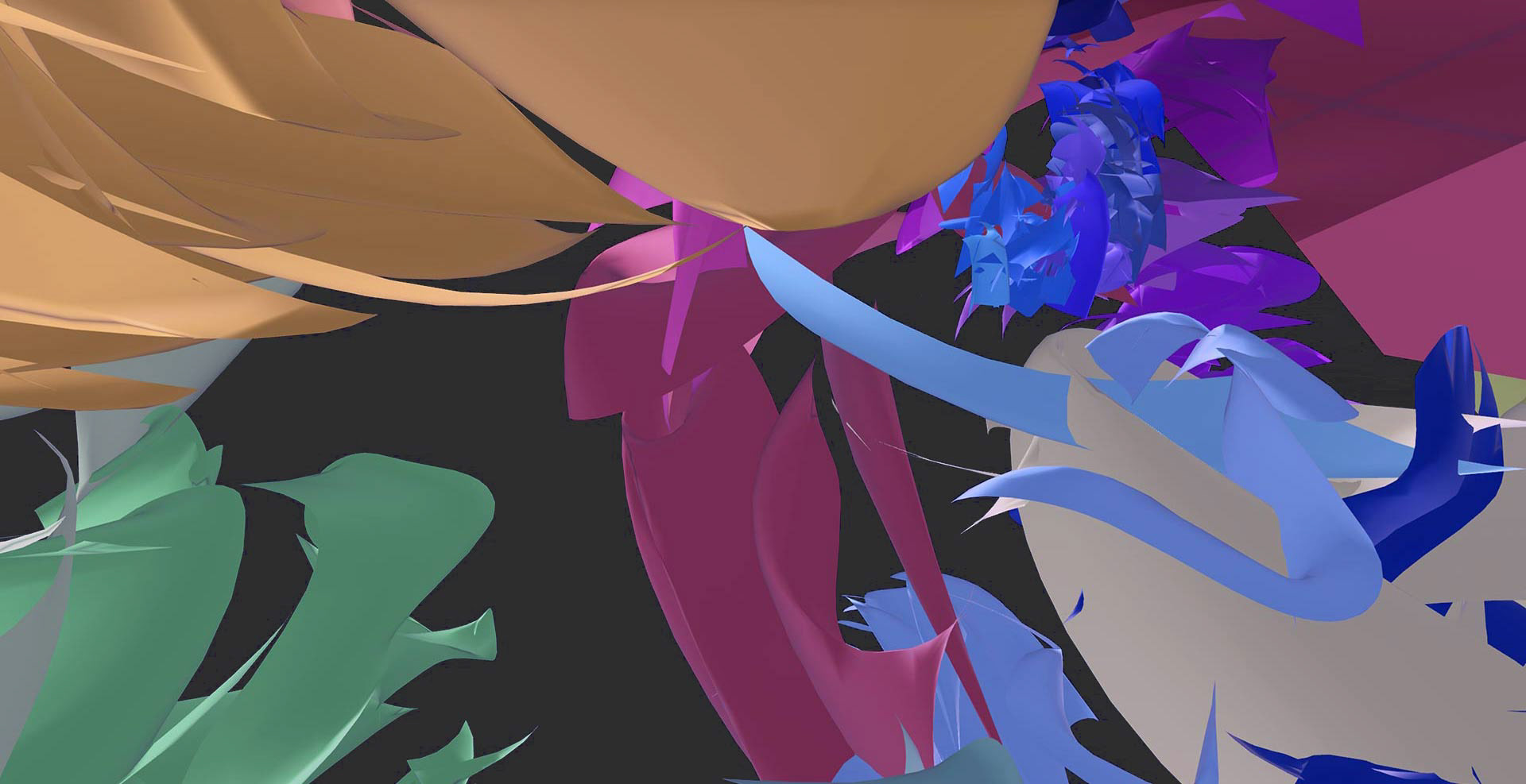

The Gravity Sketch outputs were 3D sculptures, some quite unexpected. While some appeared skeletal and artificial, others showed natural synergies, sometimes looking floral.

VR painting felt liberating and hands-on - I was forced to physically move to music in unexpected ways. One downside of digital artistry is feeling desk-bound; this process felt more like dance or yoga than any previous digital creative work.

From this process, I created six 3D sculptures - one per audio stem. These were stripped back and converted to AR format (.usdz). For the augmented reality component, I made significant quality compromises - sculptures required decimation (reduced polygon count) and material textures were mostly incompatible and needed simplification. Mobile devices running on 4G were one limitation; the Scavengar AR app's 20MB file size limit was another.
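A size budget like this is easy to check before uploading. The sketch below is a hypothetical helper, not part of any Scavengar tooling: it lists files over a limit and gives a very rough decimation target. It assumes "20MB" means 20 million bytes and that file size scales roughly with polygon count, which ignores textures.

```python
import os

SIZE_LIMIT_BYTES = 20 * 1000 * 1000  # assumption: "20MB" read as 20 million bytes

def over_limit(paths, limit=SIZE_LIMIT_BYTES):
    """Return {path: size_in_bytes} for every file larger than the limit."""
    sizes = {p: os.path.getsize(p) for p in paths}
    return {p: s for p, s in sizes.items() if s > limit}

def rough_decimation_ratio(size_bytes, limit=SIZE_LIMIT_BYTES):
    """Fraction of polygons to keep, assuming size is proportional to polygon count.

    This is only a first guess: textures and materials also contribute to file size.
    """
    if size_bytes <= limit:
        return 1.0
    return limit / size_bytes
```

For example, under these assumptions a 40 million byte export would need roughly half its polygons removed, so `rough_decimation_ratio(40_000_000)` returns `0.5`.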

Using Gravity Sketch to paint in 3D on the Oculus Quest 2

For most stems there were usually only 4-5 seconds of audio to paint to before I had to change colour

Augmented reality - audio-reactive 3D sculptures hauntingly disappear and reappear in the viewer's reality

Augmented Reality Implementation

I wanted each 3D sculpture perfectly synced with audio. This was challenging because the composition was too long to export from Blender as a single, perfectly synced animation. The unexpected solution was animating within Reality Composer, which kept file sizes low while offering tighter audio-visual control.

Revisiting our vision of impressing Olomouc's medieval forefathers and exploring summoning/witnessing themes, I made each sculpture appear and disappear in sync with audio. This tied into Olomouc's gothic heritage with haunting virtual sculptures. When "summoning" AR through iOS devices and overlaying on reality, these became ghostly apparitions installed throughout Olomouc using the Scavengar app.

Testing the AR on iOS

Virtual Reality Component

The VR component was exciting since we could achieve more than with AR. Making the same 3D sculptures react to audio created separate narratives for each audiovisual stem.

I brought the VR-sculpted 3D objects into After Effects, letting audio manipulate them. Harder sounds look like they sound; the same applies to floating, softer sounds. This creates constant dialogue between audio and visuals, with both components informing each other throughout the creative process.

Learning from our last project - where Dave discovered he needed asynchronous beats - I knew I needed 360-degree equirectangular video output for full immersion.
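For readers unfamiliar with the format, an equirectangular frame maps every viewing direction onto a 2:1 rectangle, with longitude across the width and latitude down the height. The Python sketch below shows that mapping. The axis convention (+y up, -z straight ahead) and the frame size are assumptions chosen for illustration, not settings from the project.

```python
import math

def direction_to_equirect(x, y, z, width, height):
    """Map a 3D view direction to pixel coordinates in an equirectangular frame.

    Assumed convention: +y is up, -z is straight ahead (centre of the frame),
    +x is to the viewer's right.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, -z)                             # -pi..pi, 0 = straight ahead
    lat = math.asin(max(-1.0, min(1.0, y / length)))    # -pi/2..pi/2, 0 = horizon
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v

# Hypothetical 4096x2048 frame: looking straight ahead lands in the centre.
ahead = direction_to_equirect(0.0, 0.0, -1.0, 4096, 2048)
right = direction_to_equirect(1.0, 0.0, 0.0, 4096, 2048)
```

Because the whole sphere is stretched onto one rectangle, content near the top and bottom of the frame is heavily distorted, which is worth bearing in mind when placing animated elements.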

The VR component became DENISOVA VOID, based on Denisova Square in Olomouc, presenting a dystopian glimpse of the city at an unknown future point. As Dave folded audio to create digital water fountain sounds, I used simple particle systems in Spoke to emulate the water fountain in Denisova Square.

Converting Denisova (Echo Bass) to an equirectangular 360-degree clip for VR.

Exhibition & Testing

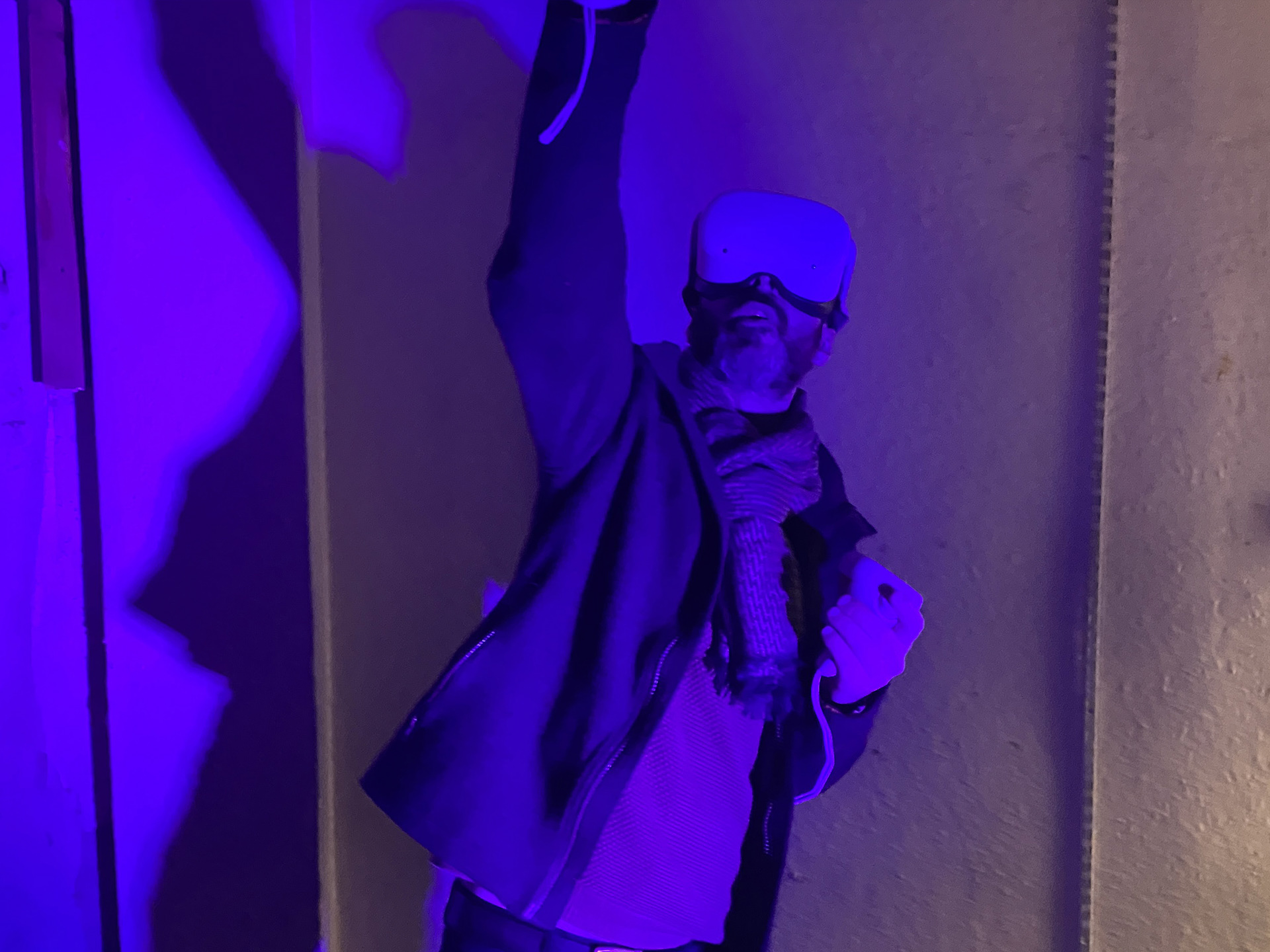

We tested DENISOVA VOID at David C Hughes' VR night at Granby St in Leicester as part of Leicester Design Season, providing valuable user feedback and refinement opportunities.

DENISOVA being user tested at the Interact Digital Arts VR night

Discover more and try the AR/VR experience on the main project page.